I'm Melanie Subbiah.

I am a 6th-year Computer Science PhD student, advised in NLP by Kathy McKeown at Columbia University. I work on language problems involving social reasoning and challenging subtext, with a focus on human narrative. Prior to grad school, I worked on GPT-3 at OpenAI. Alongside my NLP research, I am interested in AI for climate work and opportunities to speak about and teach NLP. I am on the job market for research faculty positions and post-docs.

Education

Columbia University

Ph.D. Candidate

Advisor: Kathleen McKeown

Awards:

- Best Paper Award for TACL 2024 (Reading Subtext: Evaluating Large Language Models on Short Story Summarization with Writers)

- Amazon CAIT PhD Fellowship 2023

- NSF GRFP Honorable Mention 2021 (Comp/IS/Eng - Natural Language Processing)

- Outstanding Paper Award at NeurIPS 2020 (Language Models are Few-Shot Learners)

- Presidential and SEAS Fellowship 2020

Columbia University

M.S. in Computer Science

Completed as part of MS/PhD

Williams College

B.A. in Computer Science, with highest honors

Graduated magna cum laude

Thesis: Using Text Abstraction and LSTM Language Models for Domain-Independent Narrative Generation

Advisor: Andrea Danyluk

Awards:

- Phi Beta Kappa Commencement Student Speaker

- Best Thesis Presentation award in Computer Science

- Magna Cum Laude, Phi Beta Kappa, Sigma Xi

- Honorable Mention award in short story writing

Work

Meta

Research Intern

Neural interfaces research - deep learning with EMG data.

OpenAI

Member of Technical Staff

Generative language models research - GPT-3 and the OpenAI API.

Apple

Machine Learning Research Engineer

Autonomous systems research - robotics, RL, computer vision, and NLP.

Research

H Mian*, M Subbiah*, S Marcus, N Shaalan, K McKeown. Computational Representations of Character Significance in Novels. In submission. 2026. > PDF

M Subbiah, A Mishra, G Kim, L Tang, G Durrett, K McKeown. Is the Top Still Spinning? Evaluating Subjectivity in Narrative Understanding. EMNLP. 2025. > PDF

M Limpijankit, Y Chen, M Subbiah, N Deas, K McKeown. Counterfactual Simulatability of LLM Explanations for Generation Tasks. INLG. 2025. > PDF

M Gupt, N Varimall, N Deas, M Subbiah, K McKeown. AdvSumm: Adversarial Training for Bias Mitigation in Text Summarization. NewSumm Workshop at EMNLP. 2025. > PDF

Z Hall, M Subbiah*, T Zollo, K McKeown, R Zemel. Guiding LLM Decision-Making with Fairness Reward Models. NeurIPS. 2025.

A Mayukha, M Subbiah, A Guzman, S Jitklongsub, D McAdams. Methodological Approaches to the Analysis of Life Story Interviews. ARP. 2025. > PDF

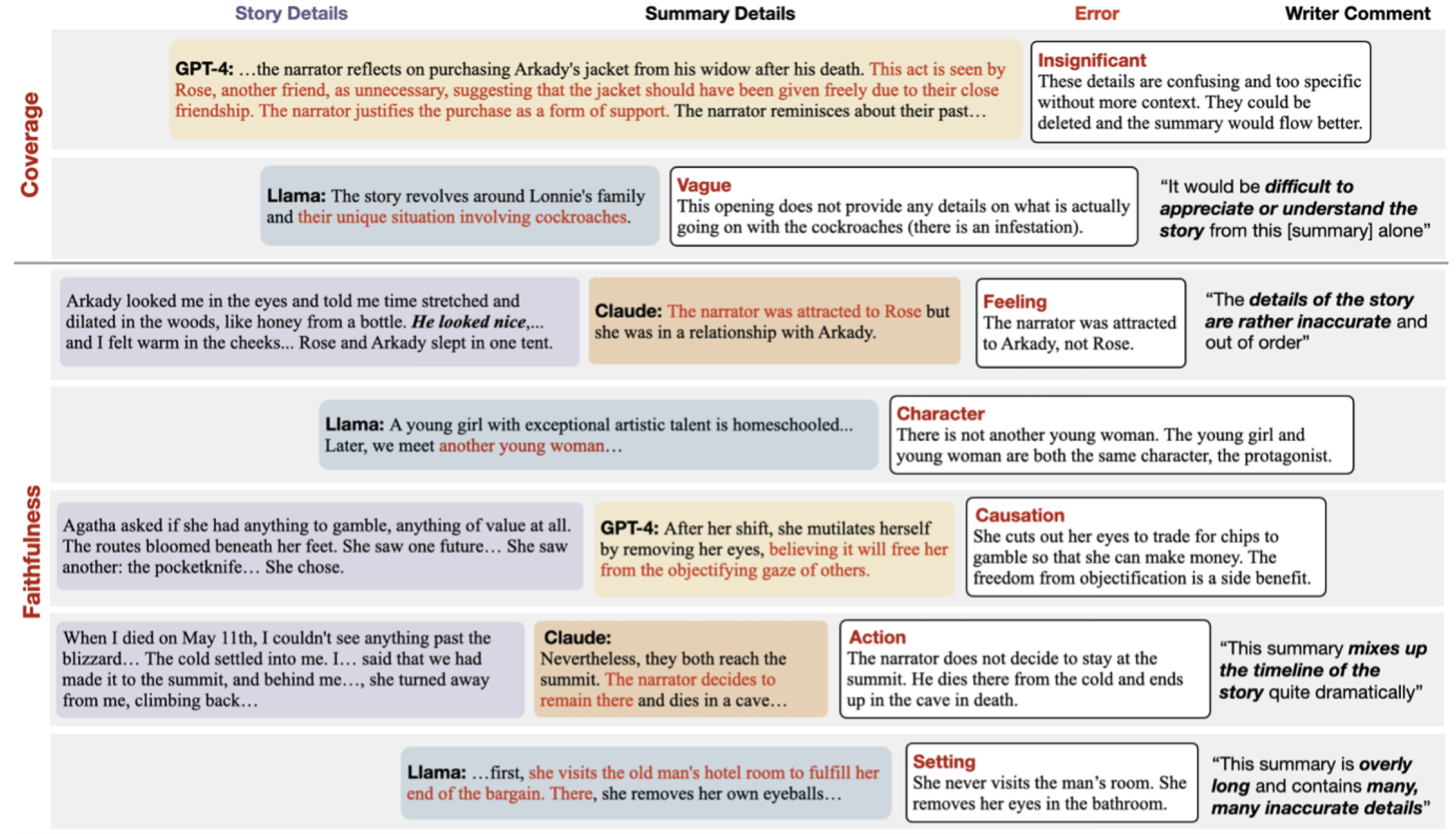

M Subbiah, F Ladhak, A Mishra, G Adams, LB Chilton, K McKeown. StorySumm: Evaluating Faithfulness in Story Summarization. EMNLP. 2024. > PDF

Best Paper Award

M Subbiah, S Zhang, LB Chilton, K McKeown. Reading Subtext: Evaluating Large Language Models on Short Story Summarization with Writers. TACL. 2024. > PDF

A Storek, M Subbiah, K McKeown. Unsupervised Selective Rationalization with Noise Injection. ACL. 2023. > PDF

G Wang, L Chillrud, K Harwood, A Ananthram, M Subbiah, K McKeown. Check-COVID: Fact-Checking COVID-19 News Claims with Scientific Evidence. Findings of ACL. 2023. > PDF

M Subbiah*, A Bhattacharjee*, Y Hua, TS Kumarage, H Liu, K McKeown. Detecting Harmful Agendas in News Articles. WASSA Workshop at ACL. 2023. > PDF

Red Teaming for GPT-4. 2023. > PDF

S Levy, E Allaway, M Subbiah, L Chilton, D Patton, K McKeown, WY Wang. SafeText: A Benchmark for Exploring Physical Safety in Language Models. EMNLP. 2022. > PDF

A Mei, A Kabir, S Levy, M Subbiah, E Allaway, J Judge, D Patton, B Bimber, K McKeown, WY Wang. Mitigating Covertly Unsafe Text within Natural Language Systems. Findings of EMNLP. 2022. > PDF

M Subbiah, K McKeown. Understanding Identity Signalling in Persuasive Online Text. International Workshop on Social Sensing at ICWSM. 2021. > PDF

Co-organizer of ICLR Workshop on Enormous Language Models. 2021. > workshop website

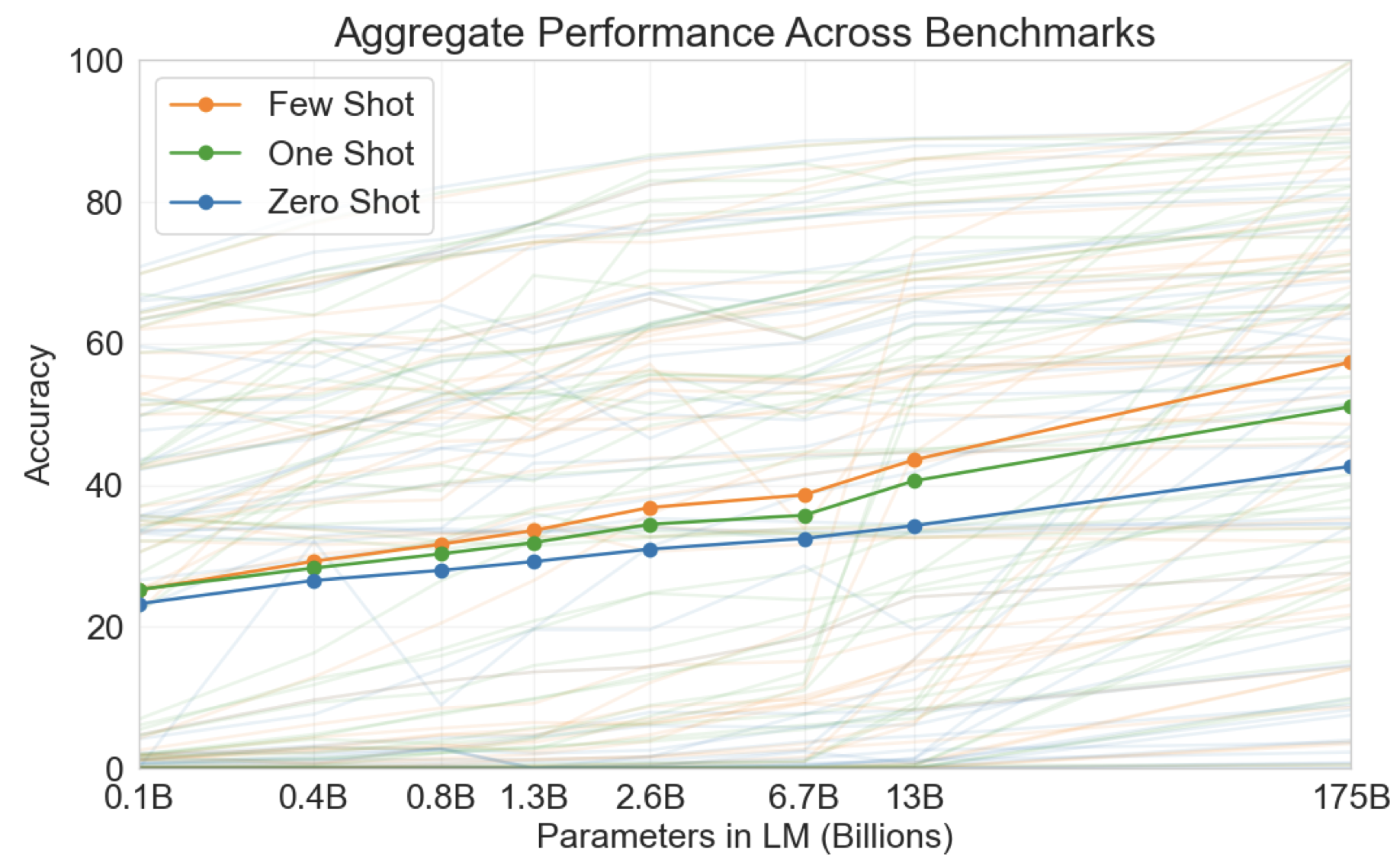

Outstanding Paper Award

TB Brown*, B Mann*, N Ryder*, M Subbiah*, et al. Language Models are Few-Shot Learners. NeurIPS. 2020. > PDF

M Subbiah, et al. Augmenting Training Data with Simulated Images. Women in Machine Learning Workshop at NeurIPS. 2018.

M Maher, M Subbiah, N Apostoloff. Cascaded Dataset QA. Women in Machine Learning Workshop at NeurIPS. 2018.

UC Nygaard, Z Li, T Palys, B Jackson, M Subbiah, M Malipatlolla, V Sampath, H Maecker, MR Karagas, KC Nadeau. Cord blood T cell subpopulations and associations with maternal cadmium and arsenic exposures. PLoS One. > PDF

Speaking

Invited Computer Science colloquium talk at Williams College. 2024. > Event

Academy for Teachers' Master Class: What Is ChatGPT? How Do You Use It? 2023. > Event

Wall Street Journal's The Journal: Artificial - Episode 2, Selling Out. 2023. > Podcast

PhD Candidacy Exam: Narrative Summarization. 2023. > Slides

Pearson Publishing's AI Catalyst Conference: NLP with ChatGPT. 2023. > Event

SuperDataScience podcast: GPT-3 for Natural Language Processing. 2022. > Podcast

Wired 5 Levels of Difficulty video: Machine Learning. YouTube. 2021. > Video

Invited seminar talk on GPT-3 at Stanford University. 2020. > Event

Invited seminar talk on GPT-3 at New York University. 2020. > Slides

Invited Computer Science colloquium talk at Williams College. 2020. > Event

Williams College Commencement Phi Beta Kappa speaker. 2017. > Video

Teaching

- Guest lecture for Computing in Context at Columbia

- Guest lecture for Global Teaching Labs at MIT - Uruguay

- Full instructor for Discrete Mathematics at Columbia

- Guest lecture for Computational Journalism at Columbia

- TA (and lecture) for Natural Language Generation and Summarization at Columbia

- CS Tutor for Columbia Athletics

- TA for Intro CS and Data Structures at Williams

Mentorship

- Columbia University research students - 3 undergraduate and 7 master's students

- Williams College CS undergrad mentor

- OpenAI Scholars program development

- OpenAI Scholars mentor

- Institute of International Education TechWomen mentor

- Williams College Underrepresented Identities in CS leader

Interests

- Natural Language Processing

- Language Models

- Narrative Understanding

- Reinforcement Learning

- Deep Learning

- Computational Creativity

- AI for Climate

- AI for Energy Efficiency

I enjoy creating AI systems that are capable of interpreting and reasoning about human behavior and social dynamics. I value ethical and sustainable approaches to AI research.